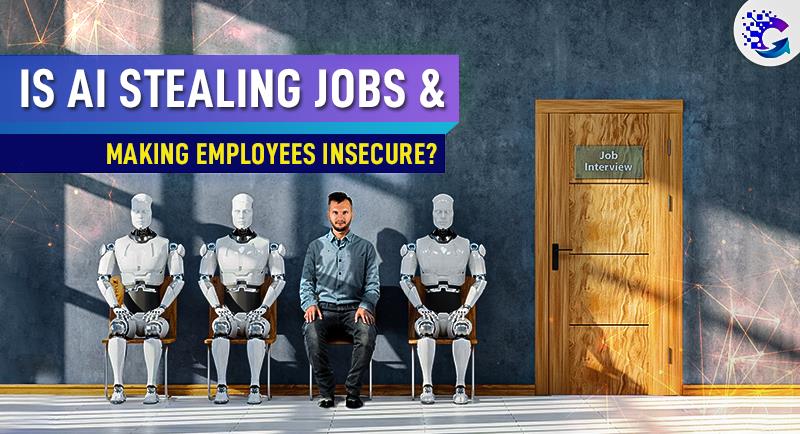

What happens when your desk partner is a machine that learns, adapts, and predicts, and you begin to wonder whether you still have a place in your own workplace?

Do we risk becoming redundant cogs, or might we actually be thrust into danger zones because our “co-worker” is artificial intelligence (AI)? In a world where code writes code, algorithms anticipate demand, and robotic arms operate alongside humans, these questions demand urgent scrutiny: Is AI stealing jobs and, even more worryingly, making employees unsafe?

From headlines warning of mass unemployment to subtle anxieties whispered in break rooms, the narrative is one of disruption. Yet the impact of AI depends not just on what the technology can do, but on how organizations adopt it, how workers respond, and how safety is managed in increasingly hybrid human-machine workplaces.

Many organizations are also consulting top AI companies in India to improve their workplaces.

Part I: The Myth (or Reality?) of AI Job Theft

1. The feared apocalypse: AI as job-eater

It is easy to conjure a dystopian vision: chatbots replacing call center agents, AI coders supplanting junior developers, robotic systems eliminating factory line workers. Many analysts and media commentators argue that AI is poised to amplify the speed and scale of job disruption compared to past waves of automation.

Such speculation has produced sweeping predictions. Some forecasts hold that a significant fraction of jobs worldwide could be affected by 2030, with estimates of automation potential running as high as 30 percent. The anxiety is magnified when leaders suggest that entry-level white-collar roles could become especially vulnerable within just a handful of years.

2. What Yale’s study uncovers

Yet, fresh empirical evidence tempers that alarm. A recent study by Yale’s Budget Lab, analyzing 33 months of U.S. labor data since ChatGPT’s public debut, finds little evidence to support widespread job displacement attributable to AI.

The occupational mix (the share of workers in different types of roles) has not changed more rapidly in the AI era than during earlier technological waves, such as the spread of computers or the internet.
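To make “how fast the occupational mix changes” concrete, one standard yardstick is a dissimilarity index: half the sum of the absolute differences in occupational shares between two dates, read as the percentage of workers who would have to switch occupations for one period's mix to match the other's. The sketch below assumes that measure and uses invented shares for three hypothetical occupations; it illustrates the idea and is not a reproduction of the Yale Budget Lab's code or data.

```python
def dissimilarity_index(shares_then: dict[str, float], shares_now: dict[str, float]) -> float:
    """Return the percentage of workers (0-100) who would need to change
    occupations for the 'now' mix to match the 'then' mix.

    Each dict maps occupation -> share of total employment (summing to 1.0).
    An occupation missing from one period is treated as having a 0 share.
    """
    occupations = set(shares_then) | set(shares_now)
    total_gap = sum(
        abs(shares_now.get(o, 0.0) - shares_then.get(o, 0.0)) for o in occupations
    )
    # A worker who leaves one occupation appears in another, so every move is
    # counted twice in total_gap; halve it to count each worker once.
    return 100 * total_gap / 2


# Hypothetical employment shares, invented purely for illustration.
before = {"clerical": 0.30, "technical": 0.45, "service": 0.25}
after = {"clerical": 0.27, "technical": 0.48, "service": 0.25}

print(f"{dissimilarity_index(before, after):.1f}% of workers would need to switch")
```

Computing this index over equal-length windows before and after a technology arrives is what allows a comparison of whether the mix is shifting faster in the AI era than it did during earlier waves.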

When researchers grouped workers into high, medium, and low AI exposure categories, their representation in the labor force remained fairly stable. Even among recent college graduates, changes in job distributions appeared similar across cohorts, suggesting that broad labor-market conditions, rather than AI, drive entry-level volatility.

In short, while anxiety is high, current data suggest that AI has so far been more a disruptor of tasks within jobs than a wholesale displacer of jobs.


3. A middle ground: transformation, not annihilation

The more defensible view is that AI is reshaping work rather than obliterating it. One study frames AI as both a threat and an enabler: it will automate tedious processes, but it will also create new roles in AI ethics, prompt engineering, model fine-tuning, human-AI interface design, and more.

But for many workers, the transition is uneasy: partial automation may mean that their job changes radically, with fewer routine tasks, more oversight, and greater cognitive demands. Some may find those shifts disorienting or even perilous if support for retraining and adapting is weak.

Many organizations are consulting top AI companies in India and implementing partial automation.

Part II: The Darker Side, Safety Risks in AI-Enabled Workplaces

Even if AI is not yet a mass terminator of jobs, it brings new kinds of risks. When humans and AI systems coexist in physical or decision-critical environments, “making employees unsafe” is not a metaphor; it is a serious challenge.

1. Removing humans from dangerous tasks

One of the hopeful arguments is that AI-enabled robots or drones can take over hazardous work: inspecting pipelines, monitoring confined spaces, handling toxic chemicals, or moving heavy loads. In industries like mining, petrochemicals, or nuclear facilities, AI-controlled systems may reduce human exposure to the worst dangers.

For example, AI-driven robots can adapt their movements based on human proximity, reducing collision risk.
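A simple way to picture proximity-aware behavior is to scale the robot's permitted speed with its measured distance from the nearest person: full speed beyond an outer distance, a protective stop inside an inner distance, and a linear slowdown in between. The sketch below assumes that linear policy; the class name and all thresholds are hypothetical placeholders, not values from any safety standard. Real collaborative systems derive such limits from risk assessments and standards like ISO/TS 15066, and enforce them on certified safety controllers rather than in application code like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeedPolicy:
    # Hypothetical thresholds (metres) and maximum speed (m/s), for illustration only.
    stop_distance_m: float = 0.5        # at or inside this distance, the robot stops
    full_speed_distance_m: float = 2.0  # at or beyond this distance, full speed is allowed
    max_speed_mps: float = 1.5

    def allowed_speed(self, nearest_person_m: float) -> float:
        """Return the permitted speed for the given human separation distance."""
        if nearest_person_m <= self.stop_distance_m:
            return 0.0  # protective stop
        if nearest_person_m >= self.full_speed_distance_m:
            return self.max_speed_mps
        # Linear ramp between the stop distance and the full-speed distance.
        fraction = (nearest_person_m - self.stop_distance_m) / (
            self.full_speed_distance_m - self.stop_distance_m
        )
        return fraction * self.max_speed_mps


policy = SpeedPolicy()
for distance in (3.0, 1.25, 0.4):
    print(f"person at {distance} m -> {policy.allowed_speed(distance):.2f} m/s")
```

The linear ramp is only one design choice; it trades some productivity near people for a predictable, easy-to-audit rule, which matters for the transparency concerns raised below.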

When used thoughtfully, AI can act as a safety multiplier, freeing humans from repetitive strain, fatigue, or error-prone tasks. Predictive analytics may spot conditions likely to lead to accidents before they occur (e.g., fatigue, heat, proximity to hazards).

This is where the best AI company in India can step in, using AI to relieve your employees of boring, repetitive work.

2. Algorithmic hazards and unpredictability

Yet AI comes with its own dangers. Machine learning systems can behave unpredictably in corner cases or scenarios outside training data. If a safety-critical AI misclassifies an anomaly or fails to adapt to changed conditions, the consequences may be dire.

The “black box” nature of many AI models complicates transparency and trust: workers may not understand why an AI made a certain decision, or how to override it.

In human-AI collaborative settings, mismatched expectations can yield “automation surprises,” where human operators misinterpret AI output or over-trust it. That mismatch can lead to mistakes. Research into human-AI collaboration and employee safety (from a job demands–resources perspective) underscores that AI can either support or hinder safety performance, depending on design, transparency, and workload.

3. Surveillance, stress, and psychosocial risks

Beyond physical hazards, AI-driven workplace monitoring brings psychosocial risks. Surveillance tools, computer vision, wearable sensors, or algorithmic performance tracking may create feelings of being watched, evaluated, or micromanaged.

Employee privacy can suffer, and trust can erode. In some implementations, AI becomes a tool for pushing employees harder: if performance dips, algorithms may flag slowdowns and trigger managerial intervention. Anonymous reports suggest that AI is being used to justify higher attrition or added pressure: “You aren’t good enough, or you aren’t using AI well enough.”

Additionally, job insecurity (real or perceived) may rise. Workers may become anxious, overworked, or burned out trying to keep pace with AI-enhanced metrics or expectations, which adversely impacts morale, mental health, and long-term safety culture.

4. The ethics-safety paradox

Deploying AI for safety requires striking a balance: algorithms must help without replacing human judgment entirely. Systems like Intenseye emphasize transparency, audit trails, and human oversight in their AI-powered safety platforms.

Part III: Navigating the Storm, Strategies for Workers, Organizations, and Policy

1. For workers: adapt, reskill, stay vigilant

- Reskill proactively: Focus on developing human-centered skills, including creativity, judgment, ethics, and emotional intelligence. These are the areas where AI (so far) struggles.

- Adopt AI as a collaborator, not a competitor: Embrace AI tools to automate tedious sub-tasks, freeing human effort for higher-value work.

- Be alert to risks: If AI monitoring or performance evaluation tools emerge at work, push for transparency and human oversight.

- Advocate for safety protocols: In industries using AI in operational settings, insist on human override, robust testing, and participatory safety governance.

2. For organizations: design responsibly

- Task redesign over task replacement: Rather than imposing AI onto existing workflows, rethink jobs so that AI and human strengths complement each other.

- Transparent AI systems: Use explainable AI, audit trails, and human-in-the-loop systems, especially in safety and oversight applications.

- Robust safety protocols: In AI-empowered physical environments, perform rigorous validation, simulate edge cases, and build in fail-safes and human override. Consider consulting the best IT company in India to design work-safety protocols.

- Ethical surveillance design: If using performance tracking or AI-driven HR tools, ensure that worker privacy, fairness, and trust are prioritized.

- Worker inclusion: Engage employees in AI adoption decisions; their experience and feedback shape better systems.

3. For policymakers and regulators

- Data and labor monitoring: Promote longitudinal studies to track AI’s real effects on employment over longer horizons.

- Regulation of AI in safety-critical systems: Mandate standards for explainability, fail-safe behavior, and human oversight when AI is used in high-risk workplaces.

- Incentives for responsible AI: Provide tax or funding incentives for organizations that deploy AI with strong worker protections and retraining programs.

- Social safety nets: Strengthen unemployment insurance, reemployment programs, and public reskilling initiatives to cushion transitions.

- Worker rights in algorithmic workplaces: Enact rules to limit exploitative AI surveillance and algorithmic management without transparency or recourse.

Part IV: The Verdict

On the employment front, early data suggest that AI has not yet triggered mass layoffs or wholesale job loss; the more visible effect is a shift in tasks within jobs. The Yale study undercuts the idea that AI is already eroding demand for cognitive labor on a sweeping scale.

However, that equilibrium may shift over time, especially as AI tools become far more capable and spread across sectors.

On the safety front, AI promises to reduce human exposure to hazards, but great risk lies in poor implementation, black-box algorithms, and surveillance regimes that neglect human oversight and psychosocial well-being.

As organizations like Grizon Tech spearhead innovation, they too must weigh their ambitions against their responsibilities. We have the opportunity to lead not just in advancing AI capabilities, but in designing workplaces where humans and machines thrive safely together.

When a company grounds its mission in both technological excellence and human dignity, it can prove that the future need not be a zero-sum game between AI and people, but a smarter, safer, and more equitable collaboration.